Interactivity in Authoring of Time & Interaction

See also: INEDIT launch video

The INEDIT project takes a scientific approach to interoperability between common music and audio production tools, in order to open new creative dimensions by coupling the authoring of time with the authoring of interaction. This coupling enables new ways of interacting with new media.

Our motivation builds on international efforts in computer music research to bridge the gap between the “compositional” and “performative” aspects of existing tools. This division is visible today in the common classification of tools into “computer-assisted composition” systems and “real-time” systems.

For the composer, performance-oriented tools (synthesizers, editors, mixers, sound generators, post-production tools) are generally limited to metaphors of sound-source production or processing (oscillators, filters, physical models). However productive these metaphors are, they need to be integrated into a global structure described by a symbolic language, analogous to a musical score, to allow genuine authoring that brings together representations, concepts, and their interactions.

Composition-oriented tools, on the other hand, generally lack a dimension that has become indispensable in new media: interactivity. There is less and less interest in creating closed, fixed media, and growing interest in new media art that interacts in real time with an external environment. This is the case, for example, in interactive music, video games, real-time interactive sonification, sound design, affective computing, serious games, and multimedia installations.

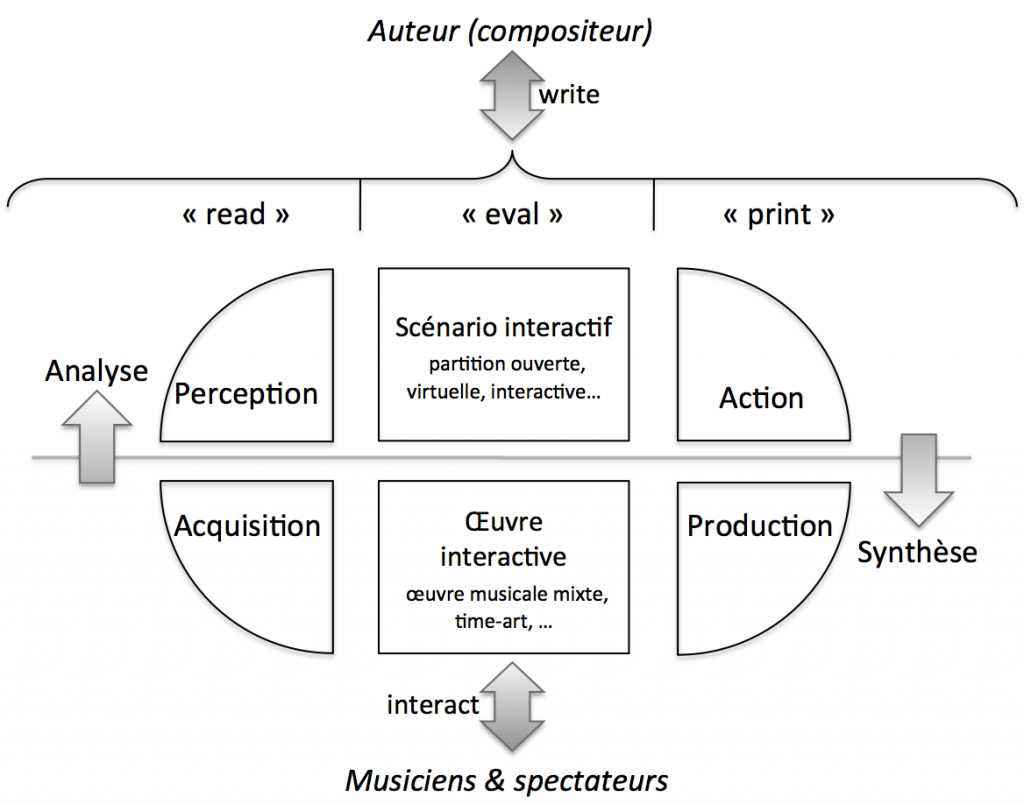

Our approach follows a formal-language paradigm: an interactive piece can be seen as a virtual interpreter that articulates locally synchronous temporal flows (audio signals) within globally asynchronous event sequences (discrete timed actions in interactive composition). Evaluating the piece then means reacting to signals and events from the environment with heterogeneous actions that the interpreter coordinates in time and space. This coordination is specified by the composer, who should be able to express and visualize temporal constraints and complex interactive scenarios across media.
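To make this interpreter model more concrete, here is a minimal Python sketch of the globally asynchronous, locally synchronous split. It is not the INEDIT implementation or the API of any partner tool: the class `GalsInterpreter`, the score format (event label mapped to delayed actions), the 48 kHz sample rate, and the 64-sample block size are all hypothetical assumptions. Asynchronous events schedule delayed actions in a priority queue, and the synchronous audio loop dispatches the actions that fall within each fixed-size block at a sample-accurate offset.

```python
import heapq
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

SAMPLE_RATE = 48_000  # assumed sample rate in Hz (hypothetical)
BLOCK_SIZE = 64       # assumed audio block length in samples (hypothetical)


@dataclass(order=True)
class ScheduledAction:
    due: int                          # absolute due time, in samples
    seq: int                          # tie-breaker that preserves scheduling order
    name: str = field(compare=False)  # action label (not used for ordering)


class GalsInterpreter:
    """Hypothetical interpreter: asynchronous events schedule timed actions,
    which are dispatched inside synchronous fixed-size audio blocks."""

    def __init__(self, score: Dict[str, List[Tuple[float, str]]]):
        # The score maps an event label to (delay in seconds, action name) pairs.
        self.score = score
        self.queue: List[ScheduledAction] = []
        self.seq = 0
        # Each entry records (block index, sample offset in block, action name).
        self.log: List[Tuple[int, int, str]] = []

    def on_event(self, label: str, sample_time: int) -> None:
        """Globally asynchronous side: an incoming event schedules its actions."""
        for delay_s, action in self.score.get(label, []):
            due = sample_time + round(delay_s * SAMPLE_RATE)
            heapq.heappush(self.queue, ScheduledAction(due, self.seq, action))
            self.seq += 1

    def process_block(self, block_index: int) -> None:
        """Locally synchronous side: dispatch every action due before the block ends."""
        start = block_index * BLOCK_SIZE
        end = start + BLOCK_SIZE
        while self.queue and self.queue[0].due < end:
            item = heapq.heappop(self.queue)
            # Actions that are already late are played at the start of the block.
            offset = max(0, item.due - start)
            self.log.append((block_index, offset, item.name))
        # A real system would render this block's audio here, applying each
        # dispatched action at its recorded sample offset.


def demo() -> List[Tuple[int, int, str]]:
    score = {"note1": [(0.0, "start_synth"), (0.01, "open_filter")]}
    interp = GalsInterpreter(score)
    interp.on_event("note1", sample_time=100)  # event detected at sample 100
    for block in range(10):                    # ten blocks cover samples 0..639
        interp.process_block(block)
    return interp.log


if __name__ == "__main__":
    print(demo())  # [(1, 36, 'start_synth'), (9, 4, 'open_filter')]
```

Keeping time in integer samples avoids floating-point drift between the event side and the block side; the trade-off in this sketch is that delays are rounded to the nearest sample and late actions are clamped to the start of the current block.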

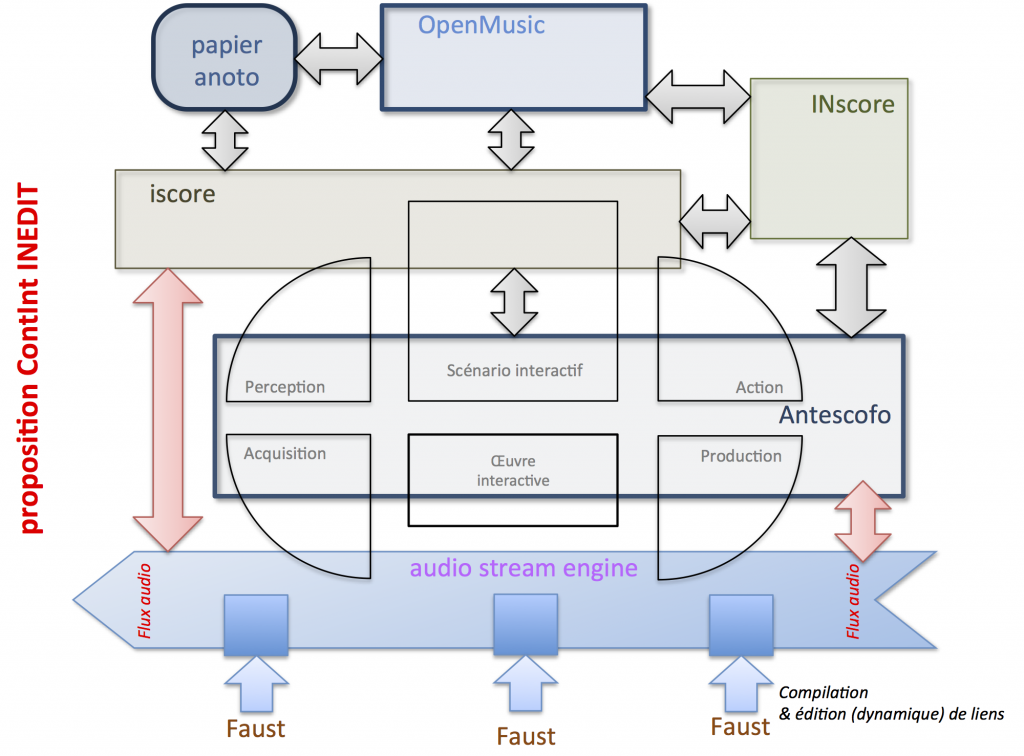

To address this issue, and in contrast to existing approaches based on specific temporal formalisms, the INEDIT project distinguishes itself by a hybrid approach: rather than reducing the rich diversity of existing approaches by imposing yet another unified formalism, we aim to preserve this diversity by correctly coordinating different tools and by providing a framework that covers the major stages of a musical workflow, from composition to performance, as described above. We build on existing tools pioneered by our partners, which are world references in their respective domains: OpenMusic and iScore for the compositional phase, INScore for visualizing interactive processes, Antescofo for articulating the signal and event paradigms, and FAUST and LibAudioStream for synchronous audio computation. Interoperability among these tools would enrich existing workflows in ways that would be impossible within a single formalism or application.

To achieve this, we rely on developing novel technologies: dedicated multimedia schedulers, runtime compilation, and innovative visualization and tangible interfaces based on augmented paper, which allow authored processes to be specified and controlled in real time. Among the scientific challenges posed by the INEDIT project is the formalization of temporal relations in a musical context, and in particular the development of a GALS (Globally Asynchronous, Locally Synchronous) approach to computation that bridges the gap between synchronous and asynchronous constraints across multiple time scales, a challenge common to existing multimedia frameworks.